A/B Testing (Single message campaigns)

A/B testing (also known as split testing) is a powerful way to improve your email campaigns by testing different versions of your message with real customers. Instead of guessing what will perform best—like which subject line gets more opens or which CTA drives more clicks—you can run experiments and let the data show you what works.

With Vero 2.0, you can create up to 20 variations of your email, track how each one performs, and choose how you’d like to run your test: you can either send each version to a fixed portion of your audience (Manual mode), or let Vero automatically send the best-performing version to the rest of your audience after a short test period (Pick a winner mode).

A/B testing works best when you have a larger audience—this ensures your results are statistically significant and not just random. As a general guide:

- With 1,000+ recipients per variation, you’re more likely to get meaningful results.

- With fewer than 300 recipients per variation, it’s harder to reach statistical significance, even if the numbers look different.
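To see why audience size matters, here is a minimal sketch that estimates how much an observed rate can wobble from chance alone at different audience sizes. It uses the standard normal-approximation margin of error for a proportion. This is a textbook formula chosen for illustration and is not necessarily the calculation Vero runs internally. The function name `margin_of_error` and the 22% example rate are assumptions made for the example.

```python
import math

def margin_of_error(rate: float, recipients: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed rate.

    Uses the normal approximation for a proportion (z = 1.96 for 95%).
    Illustrative only: not taken from Vero's implementation.
    """
    return z * math.sqrt(rate * (1 - rate) / recipients)

# Compare how precise a 22% open rate is at different audience sizes.
for n in (300, 1000, 5000):
    moe = margin_of_error(0.22, n)
    print(f"{n:>5} recipients: 22% open rate, give or take {moe * 100:.1f} points")
```

At 300 recipients the uncertainty is wide enough that a gap of a few percentage points between two variations can easily be noise, which is why smaller tests rarely reach significance.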

Vero uses proven statistical models to determine winners with a 95% confidence level, so you can trust the results and make smarter decisions based on real customer behaviour.

Whether you’re trying to boost open rates, increase conversions, or reduce unsubscribes, A/B testing gives you a reliable, data-backed way to continuously improve your email strategy.

Which type of campaigns can you A/B test?

- Campaigns triggered Immediately or Scheduled for a date and time in the future

- Recurring trigger and Event triggered campaigns cannot be A/B tested at this time, but support for these will be added in the future.

- Single message campaigns. A/B Testing for multi-message campaigns will be added in a future update.

- Email and SMS campaigns. However, SMS does not currently support ‘Pick a winner’ A/B tests.

What can you A/B test?

- Subject lines and pre-headers

- From addresses

- Body content

You can add up to 20 variations per test, giving you room to experiment with bold ideas or fine-tune subtle differences.

Creating an A/B Test

To create an A/B test for a one-off message:

- Open or create a new ‘single message’ campaign.

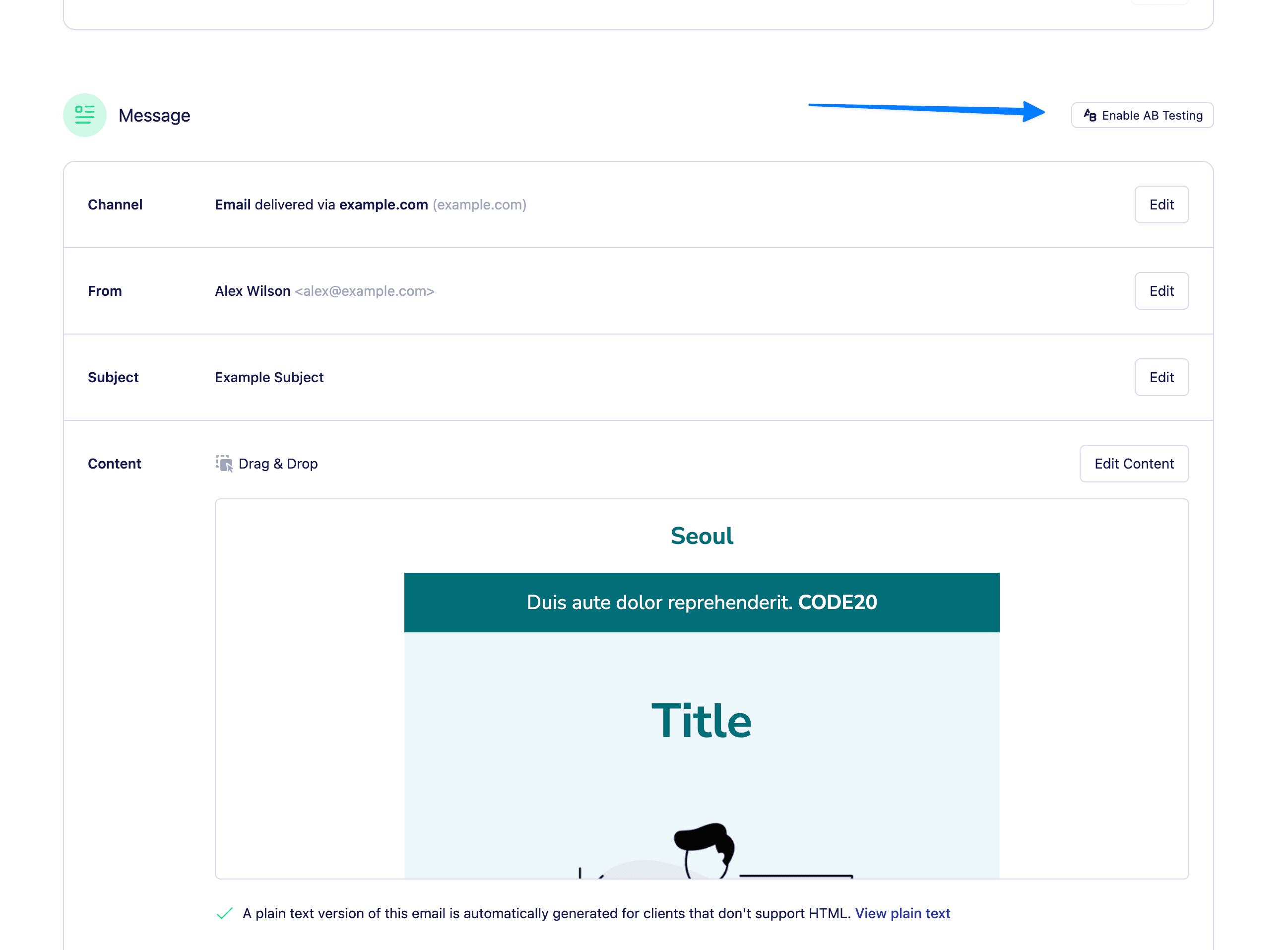

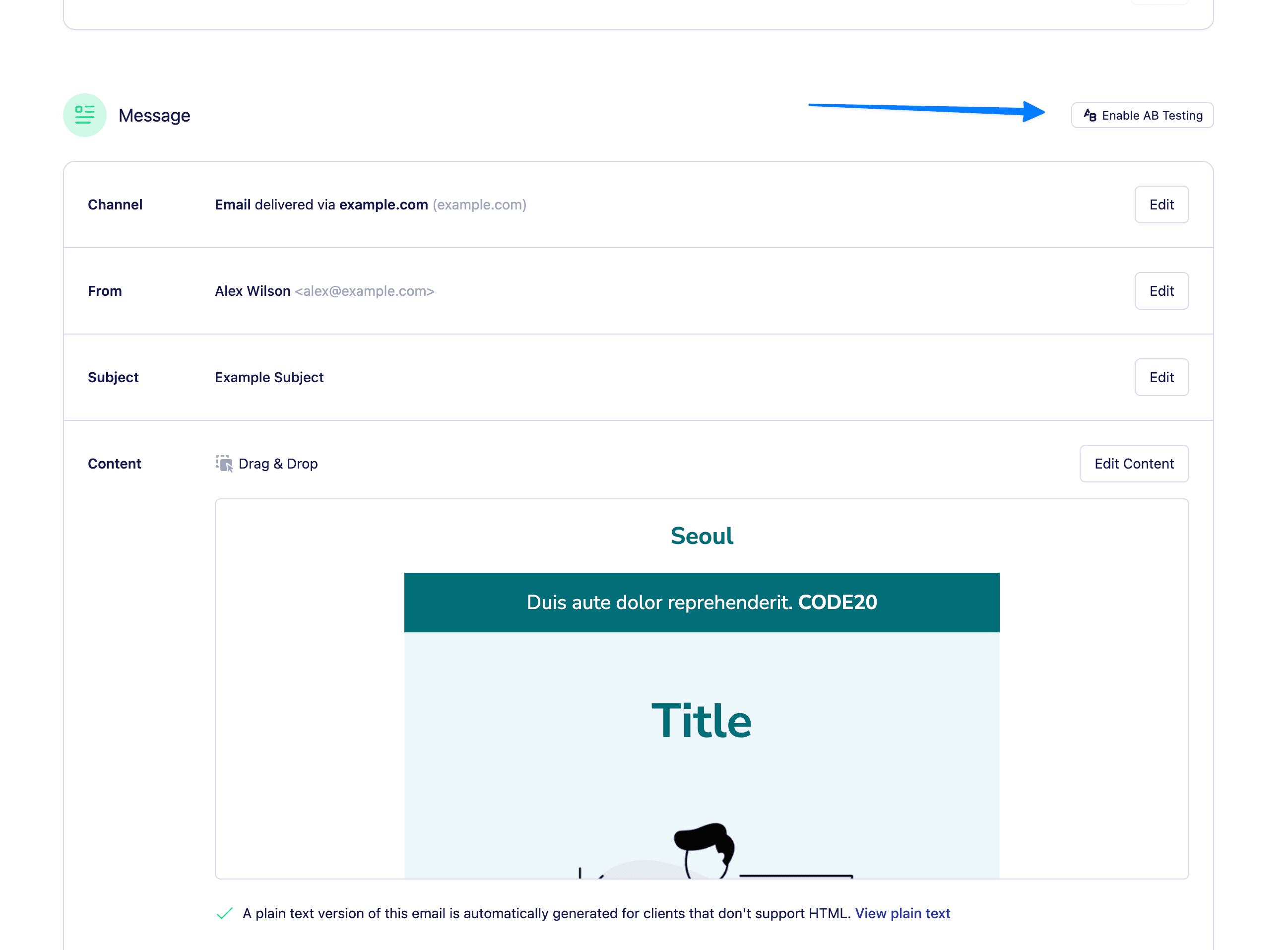

- Scroll to the Content section and toggle “Enable A/B Testing”.

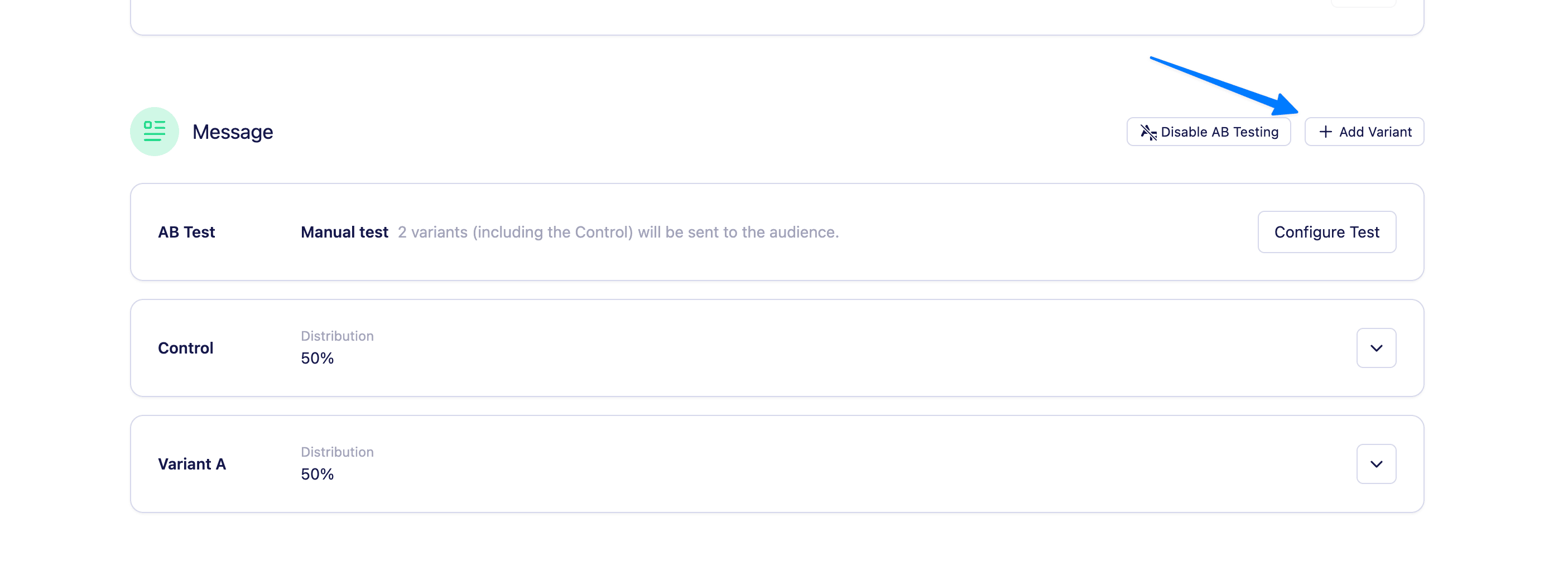

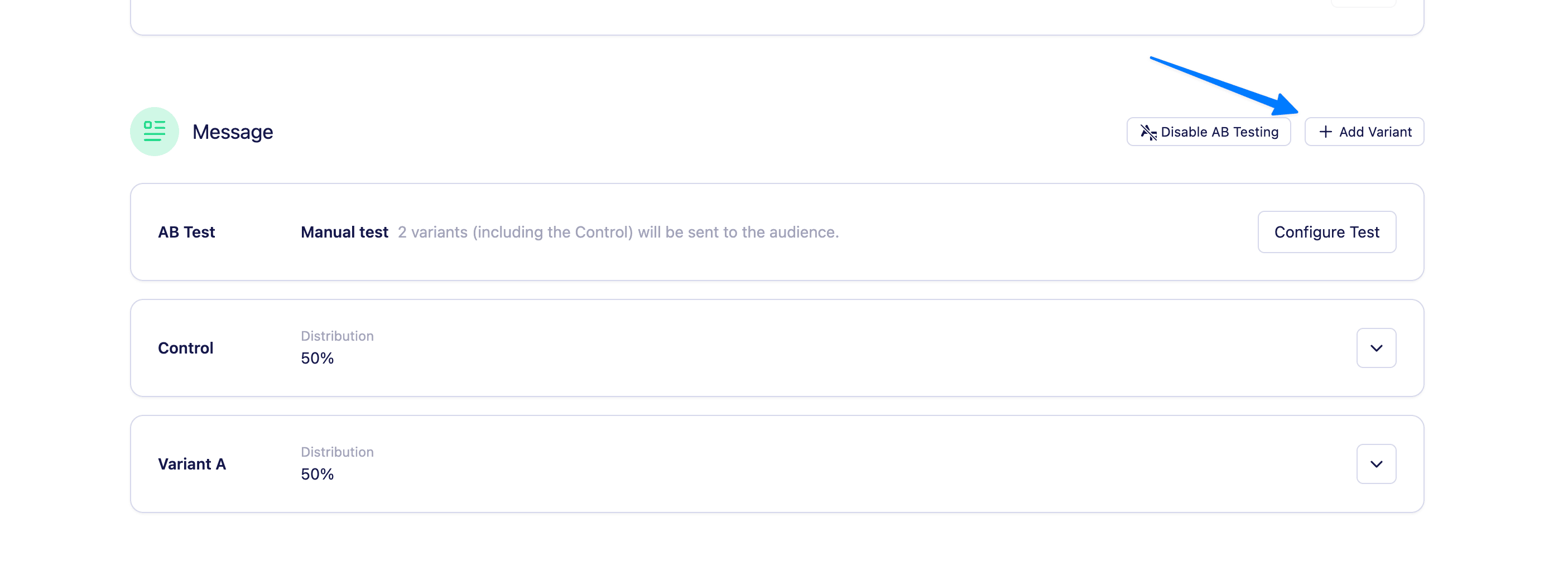

- Use the “Add variant” button to create up to 20 variants.

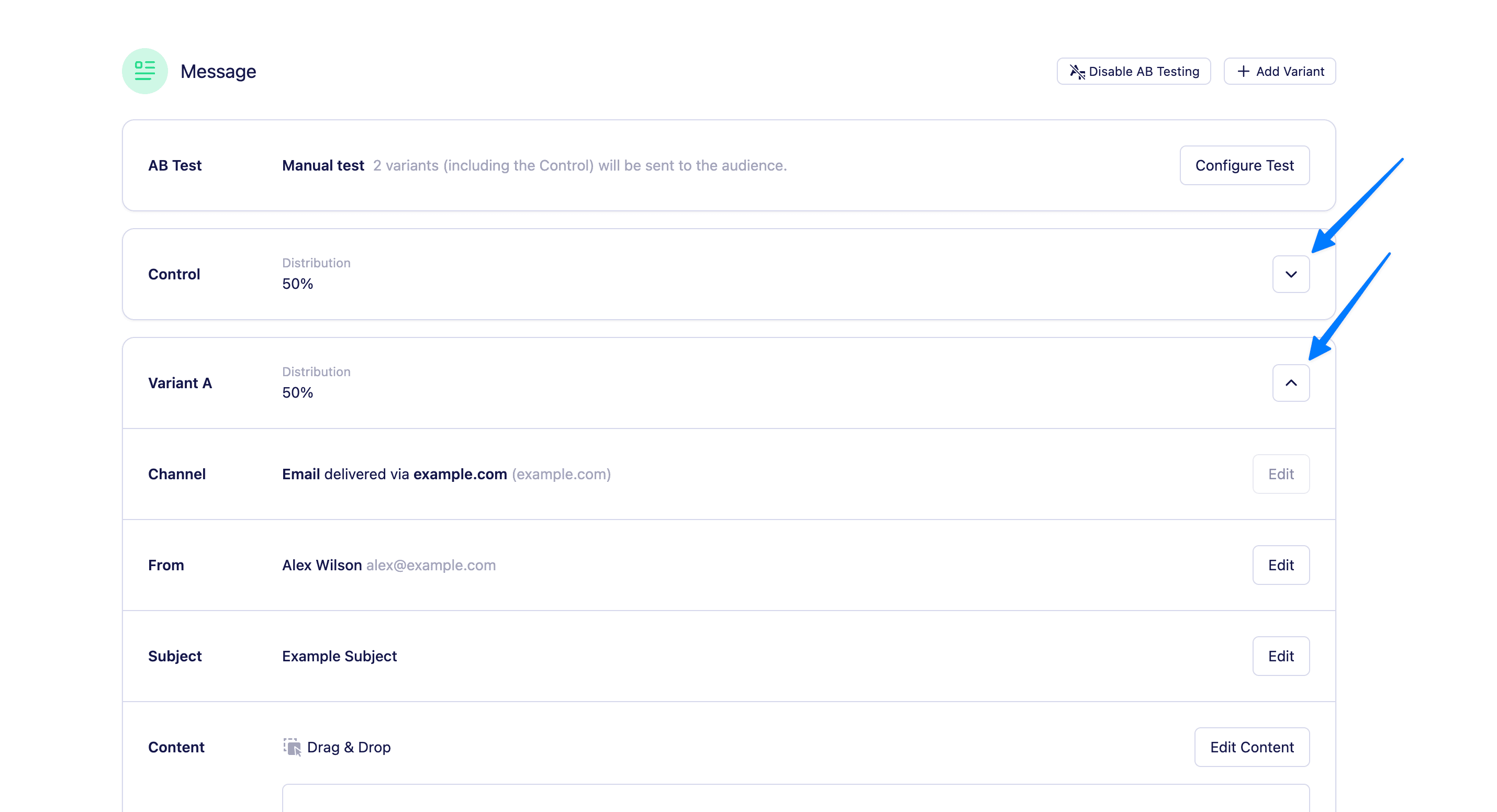

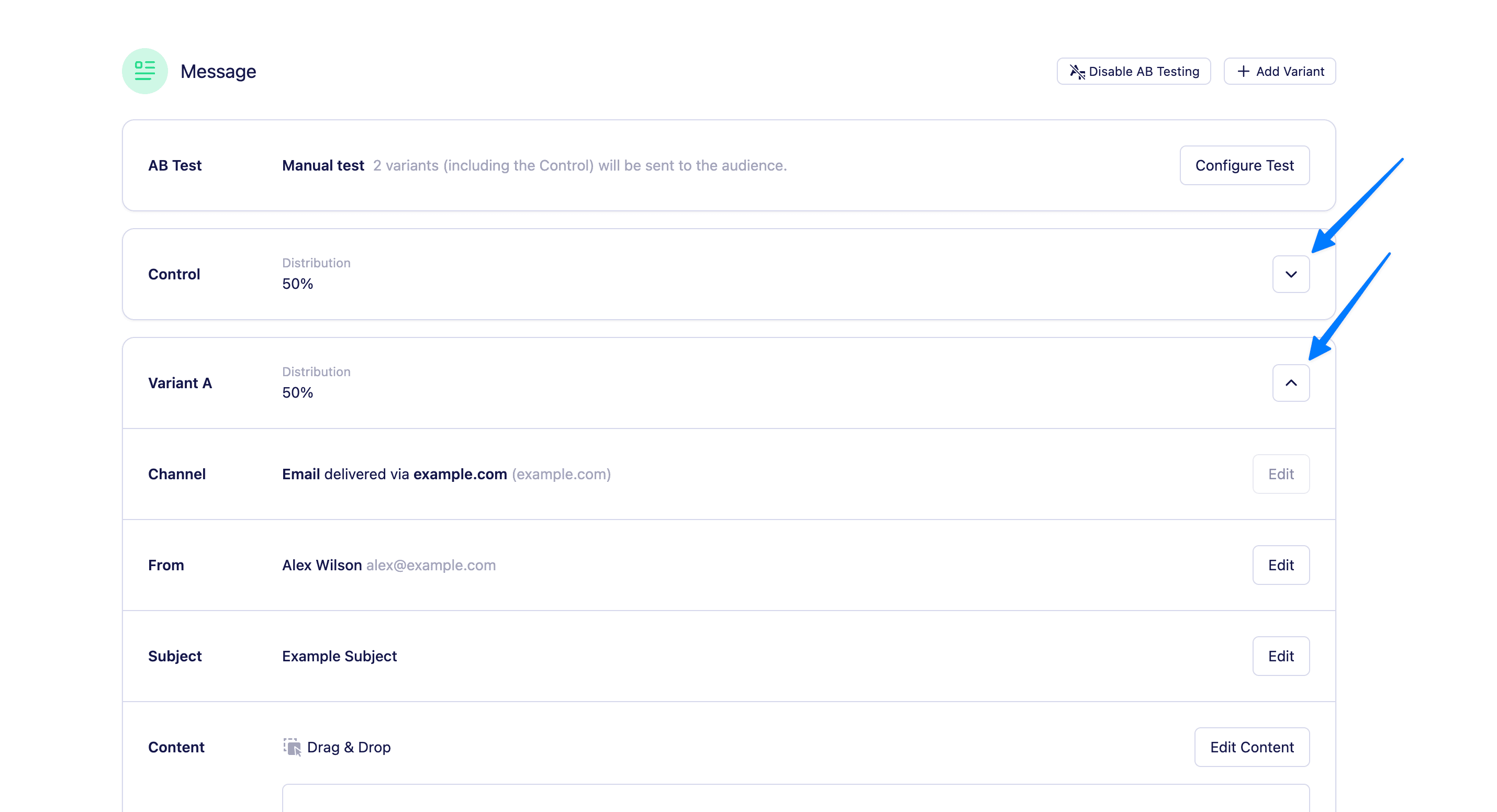

- Click the show/hide button next to each variant to view and edit the subject, from address, and content fields.

Need to remove a variation? Just click the trash can icon next to it. Want to remove A/B testing altogether? Delete all variations and click the ‘Disable A/B test’ button.

The channel, channel provider and message settings may only be edited on the control variant.

Configuring your test

Once you’ve added your variations, choose how you want to run your test. Vero 2.0 offers two test modes, accessible by clicking ‘Configure test’ on the ‘AB Test’ panel.

1. Manual

With Manual testing, you control exactly how many people receive each variation.

- Set the distribution of your split test between the variants (e.g. 50/50, 70/30, etc.)

- Vero will randomly distribute the messages based on your configuration.

- Schedule your newsletter as usual—all variations will send at the same time.

- After sending, view the insights for your A/B test and use those results to inform your next campaign.
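If you are curious what a random split looks like in practice, the sketch below assigns each recipient to a variant using weighted random draws. It is one common way to implement a split and is only an assumption about the general approach: the function `assign_variants`, the variant names and the example addresses are hypothetical and do not come from Vero.

```python
import random
from collections import Counter

def assign_variants(recipients, weights, seed=None):
    """Randomly assign each recipient to a variant.

    `weights` maps a variant name to its share of the audience (e.g. 70 and 30).
    Hypothetical helper for illustration; not Vero's actual implementation.
    """
    rng = random.Random(seed)  # a seed makes the example repeatable
    names = list(weights)
    shares = [weights[name] for name in names]
    # One independent weighted draw per recipient.
    return {r: rng.choices(names, weights=shares, k=1)[0] for r in recipients}

recipients = [f"user{i}@example.com" for i in range(10_000)]
assignment = assign_variants(recipients, {"Control": 70, "Variant B": 30}, seed=42)
print(Counter(assignment.values()))
```

Because every recipient is drawn independently in this sketch, the final counts land close to the configured split rather than matching it exactly.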

2. Pick a Winner

Let Vero automatically choose the best-performing version. (Not currently supported for SMS campaigns.)

Here’s how it works:

- Toggle on “Pick a winner”.

- Use the distribution slider to decide what percentage of your audience should receive the test (e.g. 20%).

- Set the test duration in hours (e.g. 4 hours).

- Choose your winning metric:

    - Opens

    - Clicks

    - Conversions (coming soon)

    - Unsubscribes (lowest wins)

    - Deliveries

- Choose your calculation method:

    - Statistical significance (default): This option uses a 95% confidence level. Vero uses a t-test to compare how each variation performed on your chosen metric (such as opens or clicks). For example, if your control email gets 220 opens from 1,000 sends (22%) and your variation gets 260 opens from 1,000 sends (26%), we calculate a p-value to see whether that difference is likely due to the variation or just random chance. If the p-value is below 0.05, the result is considered statistically significant: a difference that large would be unlikely to appear by chance alone if the two versions really performed the same. In that case, Vero will send the winning variation to the rest of your audience. If not, we’ll stick with the original (control) version.

    - Biggest number wins: If you choose this simple volume comparison method, Vero will select the variation that had the highest number for your chosen metric, with no statistical calculations involved. For example, if Variation A gets 220 clicks and Variation B gets 260 clicks, Variation B is chosen as the winner, even if the difference might just be due to chance. This method is quick and easy, but keep in mind it doesn’t account for audience size or variability, so it’s best used for fast decisions when you don’t need statistical confidence.

After the test window ends, the winner is automatically sent to the remaining audience. If there’s no clear winner, the original email (control) is sent.
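To make the two calculation methods more concrete, here is a rough sketch of the decision for a control and a single variation, using the 220 vs 260 example above. It is an illustration under stated assumptions, not Vero's code: the function names `p_value` and `pick_winner` are hypothetical, the comparison is limited to two versions, and the p-value uses the normal distribution as a stand-in for the t distribution (the two are nearly identical with around 1,000 recipients per version).

```python
import math
from statistics import NormalDist

def p_value(hits_a, sends_a, hits_b, sends_b):
    """Two-sided p-value for the difference between two rates."""
    rate_a, rate_b = hits_a / sends_a, hits_b / sends_b
    # Sample variance of a 0/1 outcome, as used in Welch's t-test.
    var_a = rate_a * (1 - rate_a) * sends_a / (sends_a - 1)
    var_b = rate_b * (1 - rate_b) * sends_b / (sends_b - 1)
    t_stat = (rate_b - rate_a) / math.sqrt(var_a / sends_a + var_b / sends_b)
    # Assumption: large samples, so the normal curve approximates the t curve.
    return 2 * (1 - NormalDist().cdf(abs(t_stat)))

def pick_winner(control, variant, method="significance", alpha=0.05):
    """`control` and `variant` are (hits, sends) tuples; higher is better.

    Returns "variant" or "control". Hypothetical helper for illustration.
    """
    (c_hits, c_sends), (v_hits, v_sends) = control, variant
    if method == "biggest":
        # Raw count comparison; a tie falls back to the control (assumption).
        return "variant" if v_hits > c_hits else "control"
    better = v_hits / v_sends > c_hits / c_sends
    significant = p_value(c_hits, c_sends, v_hits, v_sends) < alpha
    return "variant" if better and significant else "control"

print(pick_winner((220, 1000), (260, 1000)))                    # significance
print(pick_winner((220, 1000), (230, 1000)))                    # significance
print(pick_winner((220, 1000), (230, 1000), method="biggest"))  # raw counts
```

In this sketch, 220 vs 260 opens clears the 0.05 threshold, while 220 vs 230 does not, so the control would be kept under the statistical method even though the variation would win under "Biggest number wins".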

Viewing reports for your A/B test

You can view a breakdown of results on the Insights tab of the campaign. From there you can see a comparison of the following stats for each variant in the test:

- Opens

- Clicks

- Conversions

- Unsubscribes

- And the winning variation (if applicable)

You can also select and view each individual variant to see more granular results.

A/B Testing with Send in Batches

If you’re using A/B testing with the “Pick a winner” mode and your campaign is set to send in batches, here’s how it works:

Vero will apply your batch settings separately to both the test group and the winning group.

That means:

- The test group will be sent out in batches according to your configured batch size and interval.

- Once the test is complete and a winner is selected, the winning group will also send in batches—using the same settings.

💡 Important: The test duration countdown doesn’t begin until all test group batches have finished sending. So depending on your batch settings, this could delay when the winning version gets sent.
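As a rough way to estimate that delay, the sketch below works out when the winning version would start sending. It rests on several assumptions that are not confirmed by this article: the first batch goes out at the scheduled start time, one batch follows per interval, and the countdown starts the moment the last test batch is sent. The function `winner_send_start` and all of its parameters are hypothetical.

```python
import math
from datetime import datetime, timedelta

def winner_send_start(start, audience, test_percent, batch_size,
                      interval_minutes, test_hours):
    """Estimate when the winning version starts sending.

    Hypothetical helper: timing rules here are assumptions, not Vero's spec.
    """
    test_group = round(audience * test_percent / 100)
    batches = max(1, math.ceil(test_group / batch_size))
    # Assumption: first batch at `start`, then one batch per interval.
    last_batch_sent = start + timedelta(minutes=interval_minutes * (batches - 1))
    # The test duration countdown begins once the last test batch is out.
    return last_batch_sent + timedelta(hours=test_hours)

# 50,000 recipients, 20% test group, batches of 2,000 every 30 minutes,
# and a 4 hour test window, starting at 09:00.
eta = winner_send_start(datetime(2025, 1, 6, 9, 0), 50_000, 20, 2_000, 30, 4)
print(eta)
```

In this example the test group needs five batches, so the last one goes out two hours after the start and the winner begins sending six hours after the start rather than four.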

Have questions or want help setting up your first test? Reach out to our support team — we’re here to help!